The TL;DR

Containers are a way to run code in isolated boxes within an operating system; they help avoid disastrous failures and add some consistency to how you set up applications.

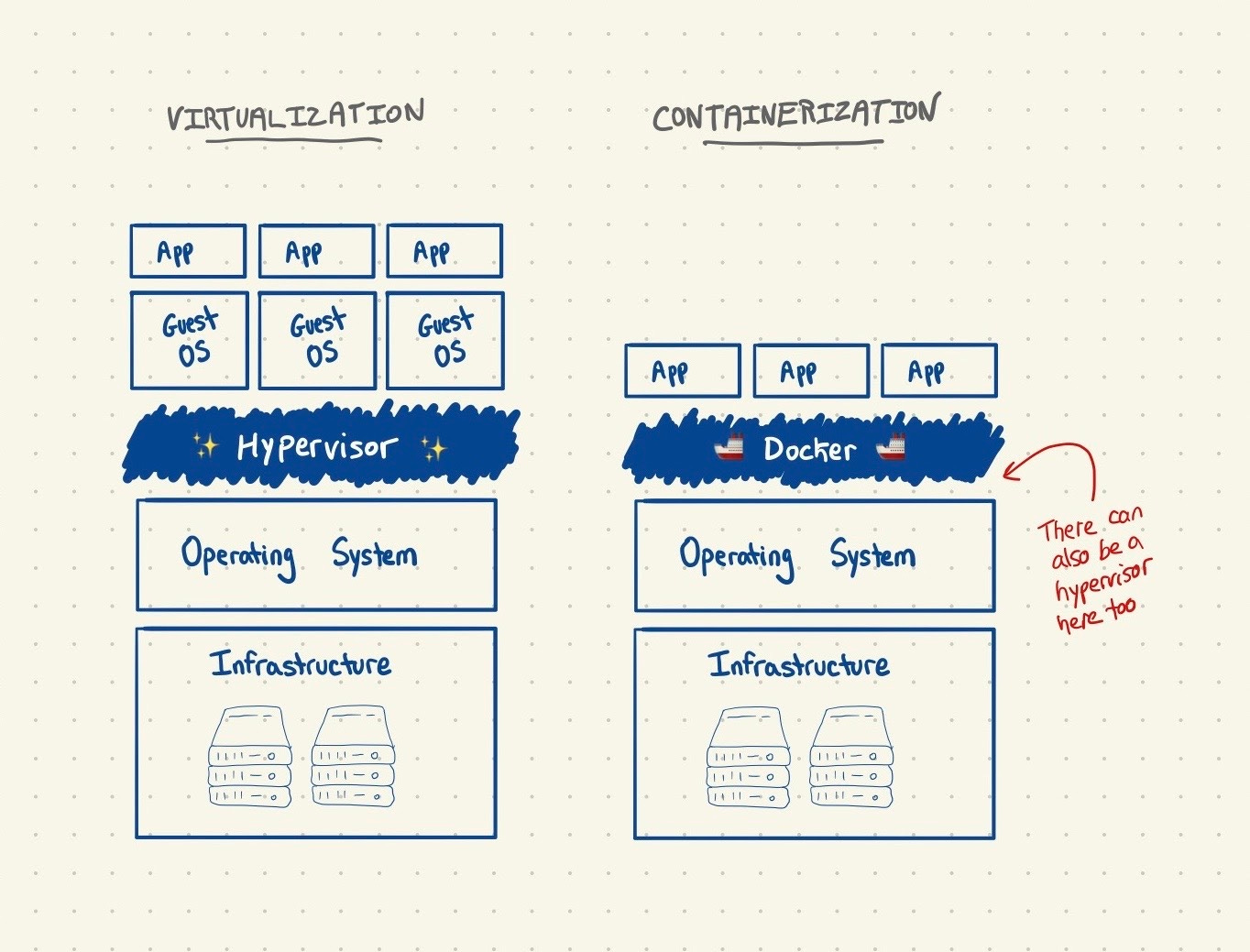

Special software called a hypervisor allows multiple isolated operating systems to run on one computer; containers do the same thing one level up, letting multiple isolated applications share a single operating system (🤯).

Containers also provide a consistent environment for deploying software, so developers don’t need to configure everything 1000 times.

The most popular container engine is Docker: it’s open source and used by >30% of all developers.

Containers (and Docker) have spawned an entirely new ecosystem of exciting developer tools. You may have heard of Kubernetes, the *checks notes* Greek god of container infrastructure. We won’t go deep on it in this post, but it, too, is Docker-related. Needless to say, understanding these little container guys is a really important part of understanding modern software.

Social distancing, but for your bad code

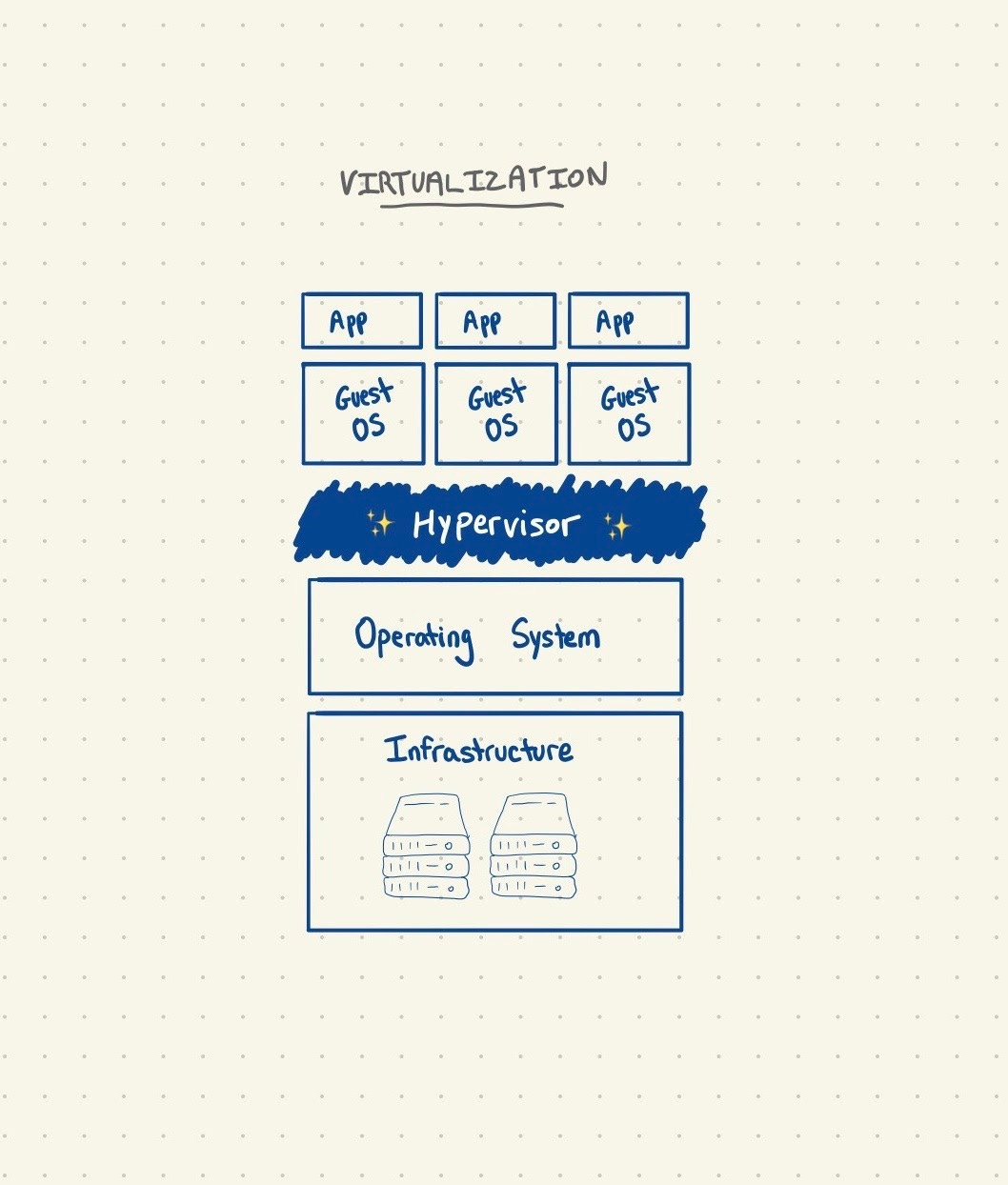

To understand Docker, you need to understand virtual machines.

The first post I ever wrote for Technically was about the cloud, and how companies run their software on big, rented servers instead of building that infrastructure themselves. The biggest provider of that cloud, AWS, is on track to make more than $40B this year (!), and it’s all thanks to one technology: the hypervisor. The hypervisor allows AWS to run code from multiple different people on one server instead of giving everyone their own; it’s a transformational way of building things. But why is this important? And why is it hard to do?

The big hairy truth of software is that your code is going to fail, and it’s going to cause problems. Even those elusive 10x engineers can never quite predict everything (like server environments, but more on that later). And when your code fails, it can blow shit up on your operating system; if you’ve ever had to restart your computer because a malfunctioning application froze your whole OS, you can metaphorically raise your hand.

If you want to cram as many people’s code onto a server as you can (what AWS does), you need to solve this error problem; you can’t let one company’s code take down everyone else’s. Hypervisors give you the ability to split one server (one computer) into multiple self-contained “boxes” with individual operating systems and infrastructure (CPUs, RAM, storage). These are called virtual machines (VMs, for short), and this is what you’re getting with AWS EC2.

⛓ Related Concepts ⛓

Virtual machines are the ABCs of cloud, and most companies on a public cloud are probably using them in some form or another. And it’s not just direct use: a lot of AWS’s (and GCP’s and Azure’s) more advanced products are built on top of virtual machines under the hood. Virtualization was, and is, a pretty important development.

⛓ Related Concepts ⛓

Now that you (hopefully) understand the concept of isolation and how virtualization (“creating virtual machines”) helps get there, containers are a simple, natural extension of that concept. The problem with virtual machines is that each one runs its own full operating system, which takes a long time to start from scratch; containers let you run multiple isolated environments on one shared operating system. That operating system can be virtual (on a server somewhere), or it can even be on your laptop. So when you run containers on a public cloud server, you’re basically doing isolation²: a “boxed” container on top of a “boxed” virtual machine. 🤯

Most importantly: because multiple containers can run on a single operating system, they’re much faster to spin up.

Developer environments suck

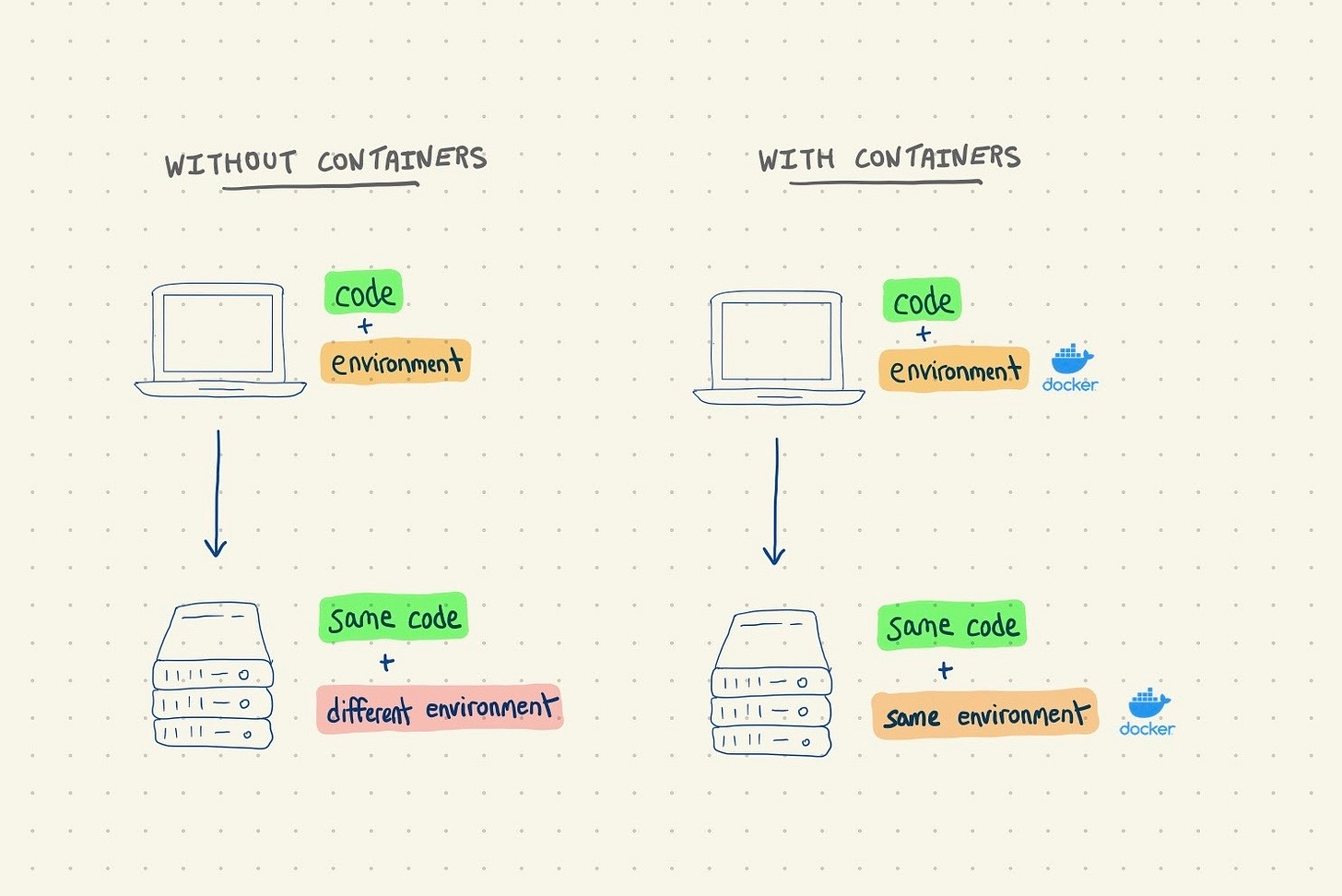

Isolation isn’t the only thing that containers bring to the table. A problem that constantly plagues developers is the environment that their code runs in. Code has a lot of dependencies:

Libraries and frameworks

Environment variables

Language versions

Access to data and plugins

Networking between services

All of these (and more!) make up your environment. Every time you install a new package or upgrade an existing one, switch language versions, or configure a connection to a database, you’re changing your environment.
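If you want to see what part of your own environment looks like, here’s a minimal Python sketch that records a few of those pieces: the language version, a couple of environment variables, and installed package versions. Running it on your laptop and on a server and comparing the output is a quick way to spot differences. The variable name `DATABASE_URL` and the default package list are just illustrative assumptions.

```python
import os
import platform
from importlib import metadata


def snapshot_environment(env_vars=("PATH", "DATABASE_URL"), packages=("pip",)):
    """Collect a few facts that make up a Python app's environment.

    The defaults are illustrative; DATABASE_URL is a hypothetical variable
    your app might read.
    """
    installed = {}
    for name in packages:
        try:
            installed[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed[name] = None  # not installed in this environment
    return {
        "python": platform.python_version(),  # e.g. "3.12.1"
        "os": platform.system(),              # e.g. "Linux" or "Darwin"
        "env": {key: os.environ.get(key) for key in env_vars},
        "packages": installed,
    }


if __name__ == "__main__":
    import json
    print(json.dumps(snapshot_environment(), indent=2))
```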

😰 Don’t sweat the details 😰

If you’re not sure what any of the individual bullet points mean, don’t sweat it. They’re all code or code-adjacent configurations that impact how your code runs in a given environment.

😰 Don’t sweat the details 😰

The typical workflow for developers is to develop locally and then deploy to a server. So you’ll get code working on your laptop, and then drop it off on a server to run; but that server will almost never have an environment that matches your laptop’s. That’s why there’s constant debugging to do when you deploy your app.

To make this a bit more concrete, take a look at a few of these kinds of errors that I’ve personally run into:

App built on my laptop in Python uses Python 2, but the server has Python 3 installed

App built on my laptop requires access to my database, but the server is having trouble connecting to it

App built on my laptop needs to write to the /usr/local/bin folder, but the server doesn’t have permission
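Before containers, a common stopgap was a “preflight” script that checks for exactly these kinds of mismatches before the app starts. Here’s a hedged Python sketch covering the three errors above. It only detects problems; it doesn’t fix them, which is the part Docker helps with. Function names are made up for illustration.

```python
import os
import socket
import sys


def check_python(required_major):
    """Catch the 'built on Python 2, server has Python 3' mismatch."""
    return sys.version_info.major == required_major


def check_writable(path):
    """Can this process write to the given directory?"""
    return os.path.isdir(path) and os.access(path, os.W_OK)


def check_tcp(host, port, timeout=2.0):
    """Can we open a TCP connection to host:port (e.g. a database)?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def preflight(required_major, write_dir, db_host, db_port):
    """Run every check and return the names of the ones that failed."""
    checks = {
        "python version": check_python(required_major),
        "write permission": check_writable(write_dir),
        "database reachable": check_tcp(db_host, db_port),
    }
    return [name for name, ok in checks.items() if not ok]
```

A call like `preflight(3, "/usr/local/bin", "db.internal.example", 5432)` (hypothetical host and port) returns the names of any failed checks, or an empty list if everything looks fine.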

These problems are the worst, and they’re a constant when you’re building and deploying software. But what if you could develop and deploy your code in the same environment, with the same packages installed at the same versions? Docker lets you do that. If you build your app in a Docker container on your computer, you can deploy that container – packaged together with all of the dependencies you need – to a server.

It’s like moving houses, but keeping all of your furniture. And the wallpaper.

Docker 101

Docker is an app like any other: you can download it from the Docker website, and you’ll see a little icon with preferences and stuff. When you’re running Docker on a server, though, it’s all through the command line. Let’s run through some of the basics:

Docker images

The basic starting point for a Docker container is an image. An image is like a blueprint for what you want your containers to look like: once you’ve built an image, you can create as many containers from it as you want, and they’ll all start out exactly the same. A Docker image is a static, read-only artifact, which makes it very sharable: Docker even has a product called Docker Hub where you can host and share your container images.
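To make the blueprint idea concrete (and yes, an image is basically a template), here’s a toy Python model. It is not Docker’s API: `Image`, `Container`, `run`, `read`, and `write` are made-up names. What it shows is the key relationship: the image is read-only, and every container created from it gets its own separate space for changes, so one container’s writes never show up in another.

```python
import itertools
from dataclasses import dataclass, field

_ids = itertools.count(1)  # hands out a fresh id to each new container


@dataclass(frozen=True)
class Image:
    """Read-only blueprint: a name, a tag, and the files baked into it."""
    name: str
    tag: str
    files: tuple  # ((path, contents), ...) pairs; a tuple keeps the image immutable

    def run(self):
        """Create a new container from this image (loosely like `docker run`)."""
        return Container(image=self, id=next(_ids))


@dataclass
class Container:
    """An instance of an image, plus its own private, writable layer."""
    image: Image
    id: int
    changes: dict = field(default_factory=dict)  # this container's writes only

    def read(self, path):
        # The container's own changes win; otherwise fall back to the image.
        if path in self.changes:
            return self.changes[path]
        return dict(self.image.files).get(path)

    def write(self, path, contents):
        self.changes[path] = contents  # never modifies the image itself


if __name__ == "__main__":
    app = Image("my-app", "1.0", (("/app/config.txt", "debug=false"),))
    a, b = app.run(), app.run()
    a.write("/app/config.txt", "debug=true")
    print(a.read("/app/config.txt"), b.read("/app/config.txt"))
```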

The Dockerfile

The Dockerfile (aptly named) is how you actually build a Docker image. It’s just a text file with a series of instructions that tell Docker what to do when it builds the image: install this version of this package, run this script, expose this port, etc. It’s common practice to start from someone else’s publicly available Docker image as a base and build on top of it; downloading an image like that from a registry such as Docker Hub is called “pulling” it.
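Here’s what a small Dockerfile might look like, wrapped in a toy Python model of how Docker builds it. The Dockerfile text (for a hypothetical Python web app with `requirements.txt` and `app.py`) uses real instructions: `FROM` names the base image, and `WORKDIR`, `COPY`, `RUN`, `EXPOSE`, and `CMD` build on top of it. The layering code is a simplification: each instruction becomes a layer whose cache key depends on everything before it, which is why changing one step makes Docker rebuild that step and every step after it. Real Docker also checks the contents of copied files, which this sketch ignores.

```python
import hashlib

DOCKERFILE = """\
# Hypothetical Dockerfile for a small Python web app
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .
EXPOSE 8000
CMD ["python", "app.py"]
"""


def parse(dockerfile):
    """Split a Dockerfile into (INSTRUCTION, arguments) pairs, skipping comments.

    Simplification: ignores backslash line continuations and parser directives.
    """
    steps = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        steps.append((instruction.upper(), args))
    return steps


def layer_keys(steps):
    """Toy cache keys: each layer's key hashes its own step plus its parent's key."""
    keys, parent = [], ""
    for instruction, args in steps:
        text = f"{parent}|{instruction} {args}"
        parent = hashlib.sha256(text.encode()).hexdigest()[:12]
        keys.append(parent)
    return keys


if __name__ == "__main__":
    for (instruction, args), key in zip(parse(DOCKERFILE), layer_keys(parse(DOCKERFILE))):
        print(key, instruction, args)
```

That chain of keys is also why the Dockerfile copies `requirements.txt` and installs dependencies before copying the rest of the code: editing your app code then doesn’t invalidate the slow dependency-install layer.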

Orchestrating containers for scale - 🤩 Kubernetes 🤩

The terminology around Docker and containers is a lot like virtual machines: you spin them up and spin them down. That’s because another major benefit of a containerized architecture is easier scaling. If your application is growing and needs more resources, you can spin up new Docker containers easily. Docker takes care of networking them together and other low-level utilities like that; but that’s just the start. This is where Kubernetes comes in.
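Spinning up more containers by hand mostly means running `docker run` more times. This Python sketch only builds the command lines (it doesn’t run anything) for several copies of the same image, each with a unique name and its own host port. `--detach`, `--name`, `--publish`, and `--env` are real `docker run` flags; the image name `my-app:1.0`, the container names, and the ports are hypothetical.

```python
import shlex


def docker_run_commands(image, base_name, replicas, container_port, first_host_port, env=None):
    """Build one `docker run` argument list per replica, each on its own host port."""
    env = env or {}
    commands = []
    for i in range(replicas):
        cmd = [
            "docker", "run",
            "--detach",                         # run in the background
            "--name", f"{base_name}-{i + 1}",   # container names must be unique
            "--publish", f"{first_host_port + i}:{container_port}",  # host:container
        ]
        for key, value in sorted(env.items()):
            cmd += ["--env", f"{key}={value}"]  # same settings for every replica
        cmd.append(image)
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    for cmd in docker_run_commands("my-app:1.0", "web", 3, 8000, 8080, {"LOG_LEVEL": "info"}):
        print(shlex.join(cmd))
```

To actually launch them, you could pass each list to `subprocess.run(cmd, check=True)`, assuming Docker is installed. Keeping track of all of this by hand gets old fast.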

Big apps (and today, for some reason, even small ones) can be made up of a bunch of Docker containers: one for the app, one for the database, one for adjacent services, and so on. Orchestrating this complex workflow and making sure the right containers are running at the right time (and that they can talk to each other) is a hard job, and that (in a nutshell) is what Kubernetes does. Docker also has Swarm, which takes on a similar, more tightly scoped version of this. More on that in a future post.
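The core idea behind an orchestrator like Kubernetes is reconciliation: you declare how many containers of each kind you want, and a control loop keeps comparing that with what’s actually running and closes the gap. Below is a toy version of one pass of that loop in Python. It is not Kubernetes’ actual API, and the service and container names are made up.

```python
def reconcile(desired, running):
    """One pass of a toy control loop.

    desired: {"web": 3, "db": 1}  -> how many containers each service should have
    running: {"web": ["web-1"]}   -> names of containers that are actually up
    Returns (start, stop): how many to start per service, and which names to stop.
    """
    start, stop = {}, []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        have = sorted(running.get(service, []))
        if len(have) < want:
            start[service] = want - len(have)  # too few: start the difference
        elif len(have) > want:
            stop.extend(have[want:])           # too many: stop the extras
    return start, stop
```

A real orchestrator runs something like this continuously, so if a container crashes, the next pass notices the shortfall and starts a replacement.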

Learning more

This is just a basic intro to containers. If you’re looking to learn more, here are a few areas to dive deeper into:

Managed services

As containers have become more ingrained in the developer workflow, managed services have begun popping up. They automate parts of the infrastructure you’d need to run containers. The best example is AWS’s ECS (Elastic Container Service), which takes care of deploying containers and some orchestration tasks too. AWS also offers Fargate, a serverless (think: no infrastructure management) option for running containers.

Kubernetes and orchestration

Kubernetes is really popular, and not just because of all of my jokes on Twitter about it; it’s becoming one of the (almost) default ways that modern apps are getting deployed, for better or for worse. Kubernetes, or K8s for short, is a very complicated piece of software to work with, and there are a lot of “shiny new thing” decisions being made. Your basic web app didn’t need Kubernetes 10 years ago and it probably doesn’t need it now.

Networking and volumes

A lot of the “environment” problem comes down to connecting things together. If your database is in one container and your main app service is in another, they need the correct configuration to talk to each other without letting just anyone in. Docker has an entire networking layer that takes care of this.
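One common pattern: put the containers on the same user-defined Docker network, where they can find each other by container name, and tell the app where its database lives through environment variables. The Python side might look like this sketch. `DB_HOST`, `DB_PORT`, the network name `app-net`, and the container name `db` are assumptions for illustration, and the commands in the comments show one way you might start things up.

```python
import os

# One way to wire this up (names are hypothetical):
#   docker network create app-net
#   docker run --detach --network app-net --name db --env POSTGRES_PASSWORD=example postgres:16
#   docker run --detach --network app-net --env DB_HOST=db my-app:1.0


def database_address(env=None):
    """Work out where the database lives from environment variables.

    On a user-defined Docker network, containers can reach each other by
    container name, so the default "db" is a container name, not a public hostname.
    """
    env = os.environ if env is None else env
    host = env.get("DB_HOST", "db")
    port = int(env.get("DB_PORT", "5432"))  # 5432 is PostgreSQL's default port
    return host, port
```

Because the address comes from configuration rather than being hard-coded, the same image works on your laptop (`DB_HOST=localhost`) and on a server.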

A related problem – since containers are ephemeral, systems need a way to keep important data around (if you destroyed data when you destroyed containers, we’d never remember anything). Docker’s Volumes functionality helps persist that data so you can continue working with it.
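Here’s a tiny Python sketch of why volumes matter: an app that keeps a visit counter in a directory. If that directory is a Docker volume mounted into the container, the count survives when the container is destroyed and replaced; if it lives only inside the container, a new container starts back at 1. The file name `visits.txt`, the mount path `/data`, and the volume name `app-data` are hypothetical.

```python
from pathlib import Path

# Hypothetical setup, so /data outlives any single container:
#   docker volume create app-data
#   docker run --volume app-data:/data my-app:1.0
# The app would then call record_visit("/data").


def record_visit(data_dir):
    """Increment a visit counter stored in data_dir and return the new count."""
    counter = Path(data_dir) / "visits.txt"
    count = int(counter.read_text()) if counter.exists() else 0
    count += 1
    counter.write_text(str(count))
    return count
```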

Serverless and lightweight virtualization

Containers are all the rage, but as with literally everything in software, there’s something new-er and cool-er on the horizon. One of those things is serverless (post coming soon!) – it’s a way of deploying functions instead of an entire app, and avoiding having to work with any infrastructure (like VMs) at all. AWS is also working on a new lightweight virtualization tool called Firecracker that has the potential to re-paint this entire picture.
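For a feel of what “deploying functions instead of an entire app” means, here’s a minimal Python function in the shape AWS Lambda expects for Python handlers: `handler(event, context)`, returning a response dict that API Gateway can turn into an HTTP response. Treat it as a sketch: the event field it reads assumes the function is triggered through an API Gateway proxy integration, and the greeting logic is made up.

```python
import json


def handler(event, context):
    """A single function deployed on its own; the platform invokes it per request.

    Assumes an API Gateway proxy-style event, where query parameters arrive
    in event["queryStringParameters"] (which can be None).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Notice what’s missing: no web server, no container setup, no VM. The platform handles all of that.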

Terms and concepts covered

Virtual machine, virtualization

Hypervisor

Isolation

Environment

Docker

Image

Dockerfile

Orchestration

Kubernetes

Networking

Volumes (phew)
